Oh, yeah, this is TOTALLY fun! Didn't mean to imply otherwise.

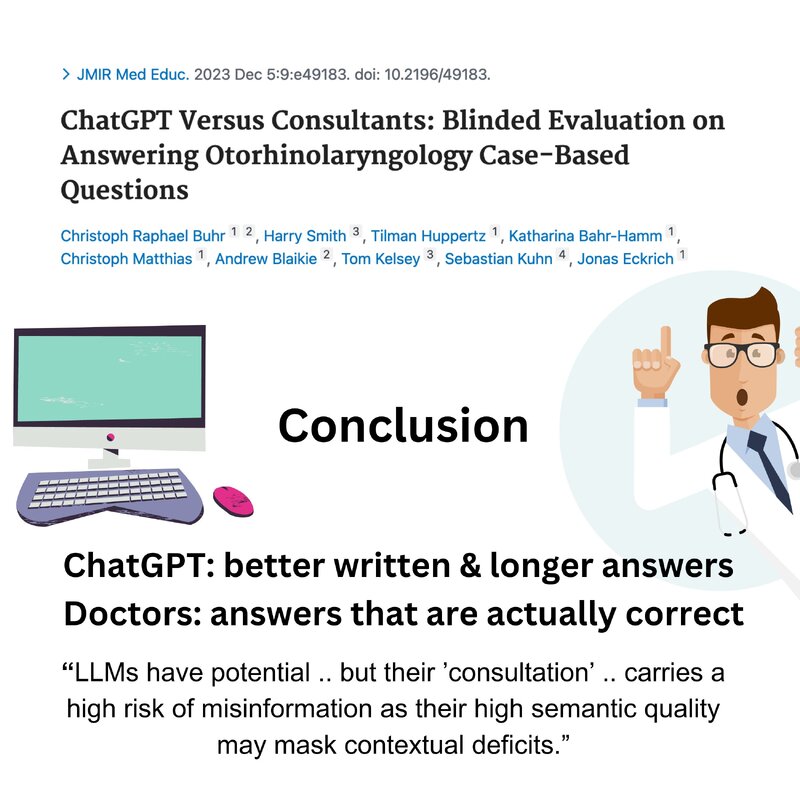

But the most important thing we should ALWAYS keep in mind is that an LLM (as opposed to other AI applications) is NOT designed to give accurate information. It is designed to CONFIDENTLY produce information in excellent linguistic style, better than most humans are capable of.

And that's the dangerous combination: a lack of underlying accuracy, paired with a polished surface that will lead anyone without their own expert knowledge to assume the content is correct.

Pretty much the same thing happened when websites first came out. People would look at a well-designed site and just assume that if it looked good, it was probably correct.